From people reading to machines learning – how Gaia-X enables digital cultural heritage

This article is a transcription of the talk of the same name. It describes our first experiences of publishing data from CrossAsia's Integrated Text Repository (ITR) in Gaia-X and making it available to research and, above all, to the digital humanities. The presentation was given on October 11th, 2023 at the Europeana Tech Conference 2023 in The Hague.

The starting point of the journey towards Gaia-X is the CrossAsia website. CrossAsia is the portal run by the Staatsbibliothek zu Berlin (SBB, State Library of Berlin), which bundles all services of the DFG-funded Specialised Information Service Asia (FID Asien) along with other services. The specialised information service is aimed at humanities scholars working on Asia and focuses on East, Central and Southeast Asia. The Staatsbibliothek not only collects materials from and about these regions, but also adopted an e-preferred strategy more than 20 years ago: whenever an electronic medium such as a journal or book can be licensed permanently, the library licenses the electronic document rather than acquiring a printed copy. In addition to cross-regional or national access rights, the State Library also tries to negotiate the rights to archive the documents locally, including text and data mining rights.

Beyond the entries in the reference systems, the management of licensed data such as image data, full texts, film and sound documents is of particular importance. One of the first services developed from the management of digital objects is the CrossAsia-Fulltext Search. It covers the library's own digitised and full-text objects as well as licensed objects from publishers and other providers. These are mainly text and image materials, ranging from historical sources, books and scientific articles to current newspapers. To date, materials in English and Chinese predominate; coverage of other languages is being expanded continuously.

All this content is archived in the so-called Integrated Text Repository (ITR). Its technical basis is an infrastructure built on the Fedora repository software. We also make the content searchable via a Solr index, which currently contains almost 70 million documents. This makes the ITR a collection of licence-free and licensed digital resources in Asian Studies that is unique worldwide.

For this reason, many researchers have a great interest in applying text and data mining with their own algorithms and programmes to discover patterns in this content, recognise new connections and gain new insights. FID Asien has the right to allow its users to carry out such analyses directly. However, this raises the question of how code and content come together.

One obvious idea would be an additional service that enables researchers to download entire collections from the ITR. However, the amount of data in the ITR is very large, which means that several terabytes of data may have to be downloaded. Depending on the network speed, this can take weeks. In addition, once data has been downloaded, its further distribution can no longer be controlled, which is highly problematic for all licensed content and can lead to breaches of contract. This in turn creates problems with the publishers with whom the Staatsbibliothek has concluded contracts. After all, a licence agreement is based on mutual trust, which is why stricter control of access to the data is necessary.
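To make the download argument concrete, the following minimal sketch estimates transfer times. The collection size of 10 TB, the link speeds and the efficiency factor of 0.8 are assumptions chosen for illustration, not measurements from the ITR.

```python
SECONDS_PER_DAY = 86_400


def transfer_days(size_terabytes: float, link_mbit_per_s: float,
                  efficiency: float = 0.8) -> float:
    """Estimate how many days a download of the given size takes.

    efficiency is an assumed fraction of the nominal link speed that is
    actually sustained (protocol overhead, shared connections, and so on).
    """
    size_bits = size_terabytes * 1e12 * 8  # decimal terabytes -> bits
    effective_bits_per_s = link_mbit_per_s * 1e6 * efficiency
    return size_bits / effective_bits_per_s / SECONDS_PER_DAY


if __name__ == "__main__":
    # Hypothetical collection size and link speeds, for illustration only.
    for mbit in (50, 250, 1000):
        print(f"10 TB at {mbit} Mbit/s: {transfer_days(10, mbit):.1f} days")
```

Under these assumptions, 10 TB over a 50 Mbit/s connection comes to roughly 23 days, which is in line with the "weeks" mentioned above.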

Another idea is for researchers to come to the State Library and use their own laptop to connect directly to the ITR via the SBB’s IT infrastructure and then carry out the relevant analyses. This is not always feasible for reasons of time and cost, especially if a long journey is necessary. And even in this case, it must be checked whether data is being withdrawn from the ITR on too large a scale and the State Library is thus losing control of the obligations it has entered into in the licence agreements.

An additional problem is the computing resources required, for example for training machine learning models, which a simple laptop cannot provide. Even with access to the data, the necessary computing power may therefore not be available.

Based on this problem, we started looking for a way to open up our data and our ITR for text and data mining. That’s when we came across Gaia-X. Gaia-X is a European initiative for an independent cloud infrastructure. It is more of a framework than another cloud platform like Amazon Web Services or Google, and it is divided into different domains.

The most important features of the Gaia-X framework are listed below:

- Full sovereignty over your own data. Control over the data always remains with the owner.

- Decentralisation, i.e. there is no central point in the network that is necessary for it to function.

- A set of rules to create trust and special services (available as open source) that can be used by all network participants to check whether others are also complying with the rules. Gaia-X is therefore a federated system with implicit trust.

- Gaia-X is interoperable as there is no prescribed technical infrastructure for participation.

- With Gaia-X, it is possible to create subject-specific dataspaces and link them together.

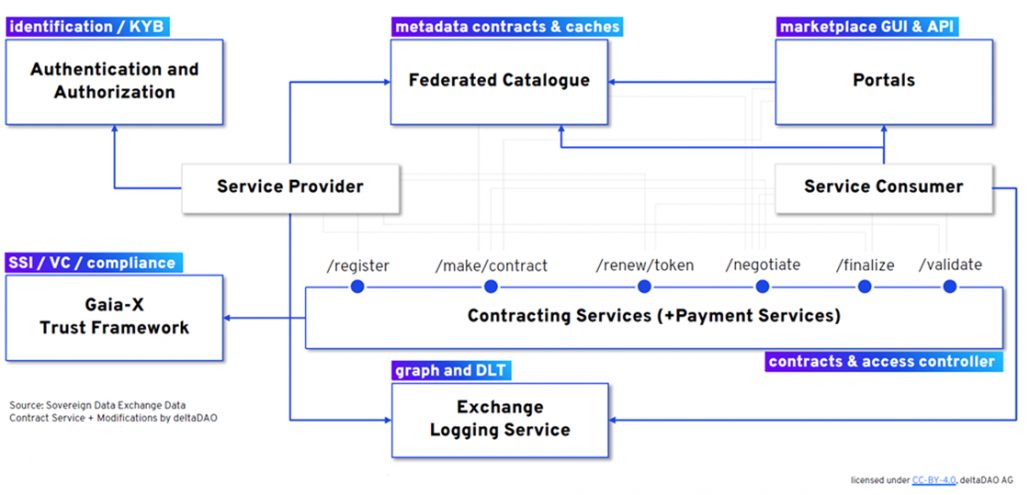

Figure 1 shows an overview of the Gaia-X domain, which we have looked at in more detail. On the left is the Trust Framework with the associated federated services that can be used to check the compliance of all participants. At the top right are the portals that represent the entry point to the subject-specific dataspaces in Gaia-X.

Everything that is published in a portal is listed in the federated catalogue, which can be seen in the illustration next to the portals. This catalogue is independent of the portals and contains information about all assets in Gaia-X. Users visit a portal and see the content in a subject-specific view of the federated catalogue, depending on the portal. However, the content is not tied to the portal and can be used anytime and anywhere in the Gaia-X network. This is another reason why Gaia-X can be described as decentralised.

To find out how these features can help us to enable text and data mining in the ITR, we launched a proof-of-concept. The result was a dedicated CrossAsia portal in Gaia-X. The contents of such a portal are so-called service offerings in Gaia-X. A service offering can be either a dataset or an algorithm. A dataset can be downloaded securely, which means that the URL of the dataset is never visible. The publisher can also set restrictions, for example on the total number of downloads.
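The idea of a secure download with a hidden URL and a capped number of downloads can be sketched as follows. This is an illustrative model only, not the Gaia-X or Ocean Protocol implementation: the class `DatasetOffering`, its methods and the `example.org` URL are hypothetical.

```python
import secrets


class DatasetOffering:
    """Hypothetical dataset offering with a hidden URL and a download cap."""

    def __init__(self, name: str, storage_url: str, max_downloads: int):
        self.name = name
        self._storage_url = storage_url  # kept on the provider side only
        self.max_downloads = max_downloads
        self._open_tokens: set[str] = set()
        self._downloads_granted = 0

    def request_download(self) -> str:
        """Issue an opaque one-time token instead of revealing the URL."""
        if self._downloads_granted >= self.max_downloads:
            raise PermissionError(f"download limit reached for {self.name!r}")
        token = secrets.token_urlsafe(16)
        self._open_tokens.add(token)
        self._downloads_granted += 1
        return token

    def resolve(self, token: str) -> str:
        """Provider-side lookup: map a token to the real location exactly once."""
        if token not in self._open_tokens:
            raise PermissionError("unknown or already used token")
        self._open_tokens.remove(token)
        return self._storage_url
```

In this sketch the user only ever holds the token; the provider resolves it internally when serving the file, so the storage location itself is never exposed.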

Another option is to activate compute-to-data for a dataset. This allows users to link the data with a published algorithm and start a compute job. Users only receive the results of their compute job, not the data itself. In this way, we can offer the data from our ITR for text and data mining without anyone having to download or move data.

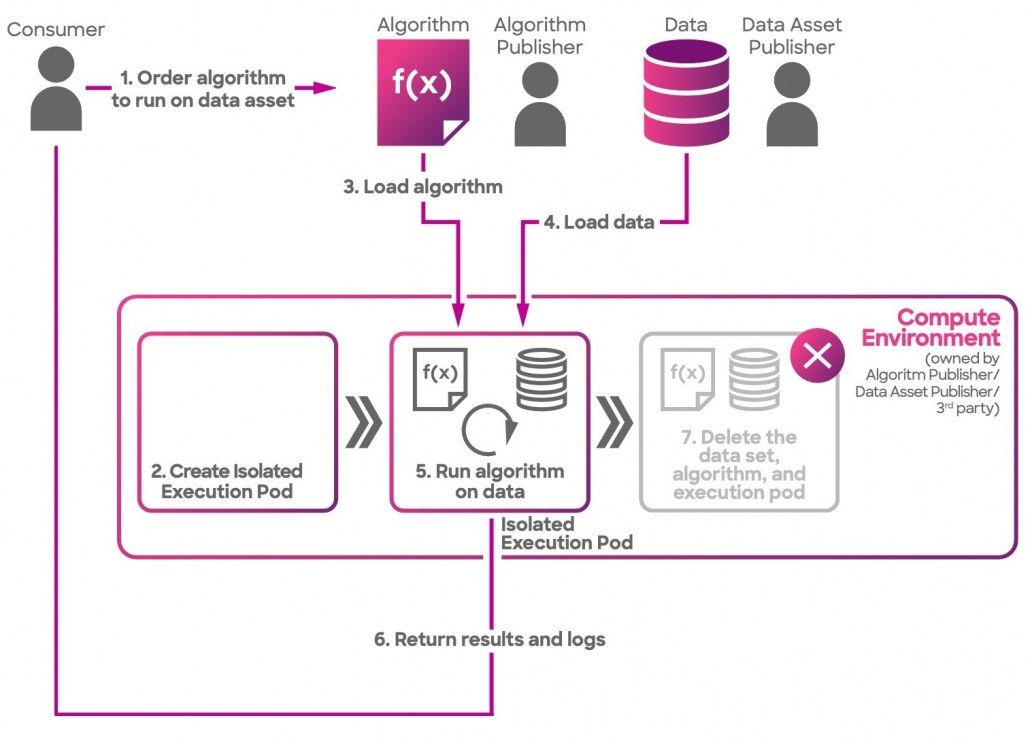

This works because the Ocean Protocol forms the technical basis of Gaia-X. Figure 2 shows a simplified technical process for compute-to-data. The steps are relatively simple: first, users select the data and the algorithm from the federated catalogue (provided they have the appropriate access rights). The data and the algorithm are then loaded into an isolated execution pod that is started within a Kubernetes environment. Only the results of the algorithm and the execution log files are then made available to the user. Finally, the execution pod is deleted.
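The compute-to-data flow can be modelled in a few lines of plain Python. The sketch below is a simplified simulation under stated assumptions: it does not use the Ocean Protocol API, all names (`DataProvider`, `run_compute_job`, `word_frequencies`) are hypothetical, and an ordinary function call stands in for the isolated Kubernetes pod, so it provides no real isolation.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Any, Callable, Iterator


@dataclass
class ComputeResult:
    """Everything the user receives: the algorithm output and a log."""
    output: Any
    log: list[str]


class DataProvider:
    """Holds datasets privately and runs submitted algorithms next to them."""

    def __init__(self, datasets: dict[str, list[str]]):
        self._datasets = datasets  # never returned to the caller directly

    def run_compute_job(
        self, dataset_id: str, algorithm: Callable[[Iterator[str]], Any]
    ) -> ComputeResult:
        log = [f"job started for dataset {dataset_id!r}"]
        documents = self._datasets[dataset_id]
        # Stand-in for the execution pod: the algorithm sees the data
        # only for the duration of this call.
        try:
            output = algorithm(iter(documents))
            log.append("algorithm finished")
        except Exception as exc:  # report failures through the log only
            output = None
            log.append(f"algorithm failed: {exc}")
        log.append("execution environment discarded")
        return ComputeResult(output=output, log=log)


def word_frequencies(documents: Iterator[str]) -> dict[str, int]:
    """Example user algorithm: the three most frequent words."""
    counts: Counter[str] = Counter()
    for text in documents:
        counts.update(text.lower().split())
    return dict(counts.most_common(3))


if __name__ == "__main__":
    provider = DataProvider({"demo": ["the silk road", "the tea road"]})
    result = provider.run_compute_job("demo", word_frequencies)
    print(result.output)
    print(result.log)
```

Note that this sketch does not stop an algorithm from simply returning the raw documents. In a real deployment that risk has to be handled separately, for example through the access rights mentioned above and by controlling which algorithms may run against a dataset.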

Publishing datasets and algorithms and combining them worked in practice as the theory describes. The proof-of-concept can therefore be considered a success: a library can publish datasets in Gaia-X, researchers can publish an algorithm, and the two can be combined via the portal. The desired results are made available without jeopardising the security of the data – no downloads are necessary and all data remains with the institution that published it.

However, setting up the portal for the first time showed that some improvements are still necessary before the solution can be used widely. Anyone who wants to try out the portal will find that getting started with the Gaia-X network is not easy and requires some explanation. As the database of the federated catalogue is a distributed ledger, a wallet is required for identification and for granting rights. A wallet (e.g. MetaMask) must therefore be installed and configured in the browser. After joining the network, however, publishing datasets and algorithms is quite simple, even though using data or starting a compute job requires confirming a series of transactions on the ledger.

To summarise, Gaia-X is an interesting new opportunity for GLAM institutions to offer data that is worthy of protection. Gaia-X is currently still driven largely by economic and industrial interests with a strong commercial orientation. Nevertheless, mainly because of the good results, we have decided to continue our activities in Gaia-X and develop the proof-of-concept into a pilot application. We are working on initial improvements to the user experience and will soon be carrying out further use cases with a scientific focus. We are also engaged in the Gaia-X and Ocean Protocol communities in order to better enable non-commercial use cases in Gaia-X and to develop Gaia-X further into a scientific ecosystem for specialised data spaces.

Based on our experience from the proof-of-concept, we would like to encourage cultural heritage institutions to think about how Gaia-X and the Ocean Protocol can support them in becoming full-stack data providers. This means being not just a provider of metadata for finding cultural artefacts, and not just a provider of texts to read, audio to listen to, images or videos to watch, or research data to analyse. Rather, it means being a data provider that also offers such cultural data in high quality for machine learning algorithms and networks and, where necessary, retains sovereignty over the data.

Currently, large language models are heavily controlled by large companies such as OpenAI, Google or Facebook. However, if everyone is given the opportunity to train their own models with data from GLAM institutions, machine learning can be democratised, because everyone then has access to the data they need for their algorithms: either to free data or, in the case of licensed data, wherever a corresponding right of access and licence exists. New approaches such as federated learning can help and even greatly simplify the process. Our aim is not just to utilise the new possibilities of artificial intelligence ourselves, but to improve the training of artificial intelligence by opening up our digital reading rooms to the algorithms.

References

- Figure 1 DeltaDAO AG https://www.delta-dao.com/ with many thanks for creating the SBB portal

- Figure 2 Ocean Protocol Foundation https://docs.oceanprotocol.com/

- Title image Europeana Foundation

If you are interested in trying the portal, please contact x-asia(at)sbb.spk-berlin.de for support if necessary.